RL for Sparsity

Abstract

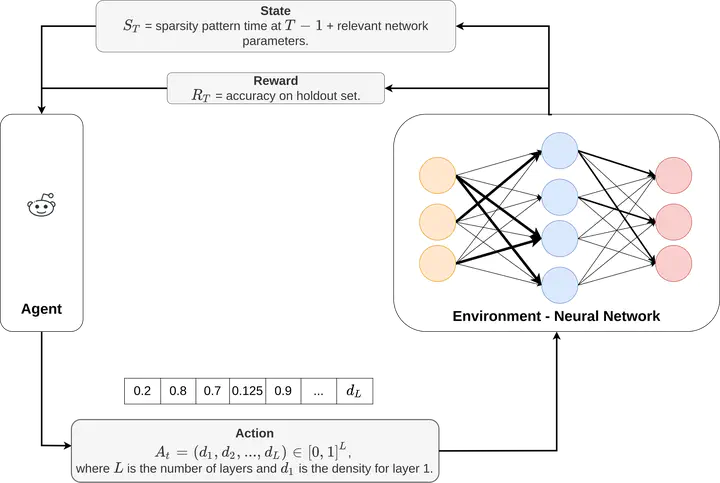

Sparse neural networks offer better efficiency and faster training and inference while maintaining the generalization performance of their dense counterparts. Popular sparsification methods have focused on what to sparsify, i.e., which redundant components to remove from a network, and rely on heuristics or simple schedules to decide when to sparsify (the sparsity schedule). In this work, we learn these sparsity schedules from scratch using reinforcement learning. More precisely, our agents learn to take actions that alter the sparsity level of individual layers in a network, while observing the resulting changes in the network's dynamics and receiving accuracy as a reward signal. We show that commonly used sparsity schedules are naive and that our learned schedules vary across both training steps and layers. On simple networks and ResNets, the learned schedules are robust and consistently outperform naive handcrafted schedules. This work emphasizes the importance of introducing and removing sparsity at the right moments in training.
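To make the agent-environment interaction described above concrete, here is a minimal sketch of what a single step could look like. It is an illustration under stated assumptions and not the implementation used in this work: magnitude pruning, the three-way action set, the sparsity cap of 0.9, and the names `magnitude_mask`, `SparsityEnv`, and `placeholder_reward` are all hypothetical, and the placeholder reward merely stands in for validation accuracy.

```python
def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that drops the `sparsity` fraction of smallest-magnitude weights.

    Magnitude pruning is an assumption made for this sketch.
    """
    n_prune = int(round(sparsity * len(weights)))
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0 if i in pruned else 1 for i in range(len(weights))]


class SparsityEnv:
    """Toy environment: each action changes the sparsity level of one layer."""

    # Hypothetical action set: decrease, keep, or increase sparsity by 0.1.
    ACTIONS = (-0.1, 0.0, 0.1)

    def __init__(self, layers):
        self.layers = layers                  # one flat list of weights per layer
        self.sparsity = [0.0] * len(layers)   # current sparsity level per layer

    def step(self, layer_idx, action_idx, evaluate):
        delta = self.ACTIONS[action_idx]
        # Clamp to [0, 0.9] (assumed cap) and round to avoid float drift.
        new_level = min(max(self.sparsity[layer_idx] + delta, 0.0), 0.9)
        self.sparsity[layer_idx] = round(new_level, 2)
        masks = [magnitude_mask(w, s) for w, s in zip(self.layers, self.sparsity)]
        reward = evaluate(self.layers, masks)  # in practice: accuracy of the masked network
        observation = list(self.sparsity)      # in practice: richer training statistics
        return observation, reward


def placeholder_reward(layers, masks):
    # Stand-in for accuracy: fraction of weights kept. Not a meaningful training signal.
    kept = sum(sum(m) for m in masks)
    total = sum(len(m) for m in masks)
    return kept / total


layers = [
    [0.5, -1.2, 0.05, 2.0, -0.3, 0.9, -0.7, 1.1, 0.2, -1.5],
    [1.0, -0.4, 0.6, -2.1, 0.8, 0.1, -0.9, 1.3, -0.2, 0.7],
]
env = SparsityEnv(layers)
# The agent increases the sparsity of layer 0 by one step.
obs, reward = env.step(layer_idx=0, action_idx=2, evaluate=placeholder_reward)
```

In a real setup, `evaluate` would train or fine-tune the masked network and return its accuracy, and a policy would choose `layer_idx` and `action_idx` from the observation at each training step, which is what yields a schedule that differs across layers and over time.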